torch-pesq¶

Loss function inspired by the PESQ score¶

Implementation of the widely used Perceptual Evaluation of Speech Quality (PESQ) score as a torch loss function. The PESQ loss alone performs not good for noise suppression, instead combine with scale invariant SDR. For more information see 1,2.

Installation¶

To install the package just run:

$ pip install torch-pesq

Usage¶

import torch

from torch_pesq import PesqLoss

pesq = PesqLoss(0.5,

sample_rate=44100,

)

mos = pesq.mos(reference, degraded)

loss = pesq(reference, degraded)

print(mos, loss)

loss.backward()

Comparison to reference implementation¶

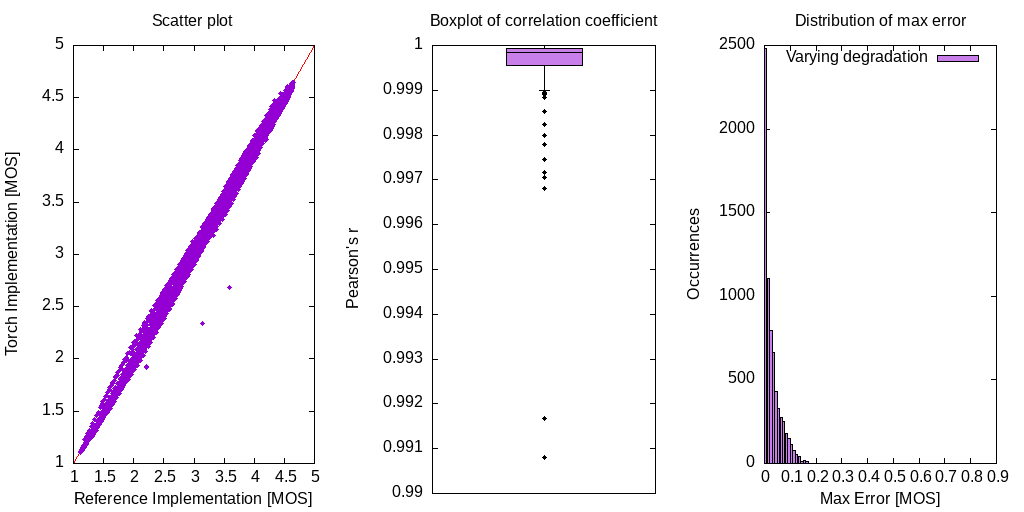

The following figures uses samples from the VCTK 1 speech and DEMAND 2 noise dataset with varying mixing factors. They illustrate correlation and maximum error between the reference and torch implementation:

The difference is a result from missing time alignment implementation and a level alignment done with IIR filtering instead of a frequency weighting. They are minor and should not be significant when used as a loss function. There are two outliers which may degrade results and further investigation is needed to find the source of difference.

Validation improvements when used as loss function¶

Validation results for fullband noise suppression:

Noise estimator: Recurrent SRU with soft masking. 8 layers, width of 512 result in ~1586k parameters of the unpruned model.

STFT for signal coding: 512 window length, 50% overlap, hamming window

Mel filterbank with 32 Mel features

The baseline system uses L1 time domain loss. Combining the PESQ loss function together with scale invariant SDR gives improvement of ~0.1MOS for PESQ and slight improvements in speech distortions, as well as a more stable training progression. Horizontal lines indicate the score of noisy speech.

Relevant references¶

Contents: